Your Private AI Server on Mac

Run powerful AI models locally with MLX-GUI. Privacy-focused, OpenAI-compatible, and beautifully simple.

Optimized for M1/M2/M3/M4 chips with MLX acceleration for blazing-fast inference.

Your data never leaves your Mac. Complete control and privacy for sensitive workloads.

Drop-in replacement for the OpenAI API. Use it with your existing tools and libraries.

Native macOS app with system tray integration. Manage models with a simple web interface.

Text generation, vision models, audio transcription, and embeddings all in one place.

No Python, no dependencies. Just download and run. HuggingFace integration is built in.

Get the latest release for your Mac. Works on any Apple Silicon Mac with 8GB+ RAM.

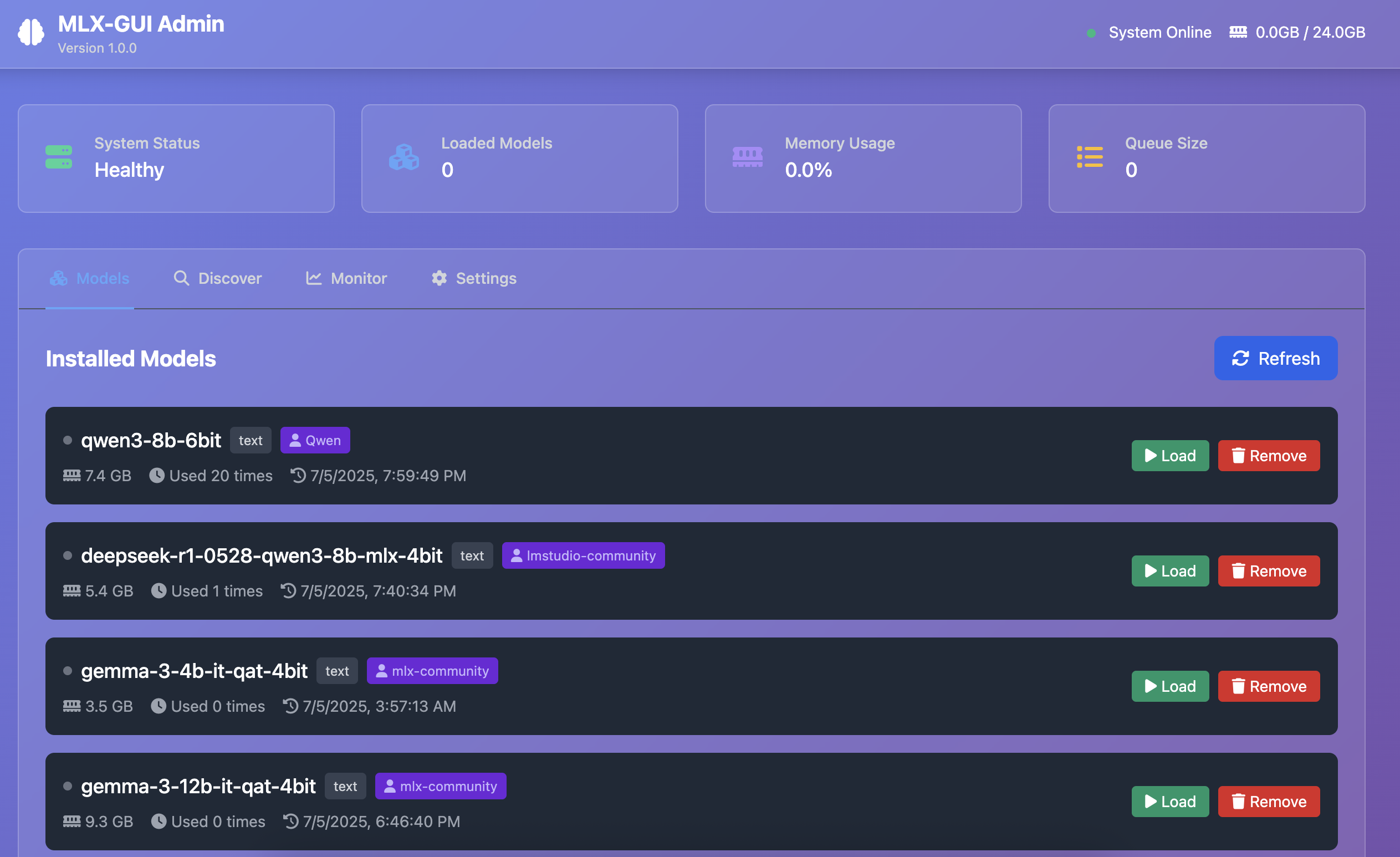

Browse and install models from HuggingFace with one click through the web UI.

Use the OpenAI-compatible API with your favorite tools or the built-in chat interface.
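Because the API follows the OpenAI wire format, any HTTP client should be able to talk to it, not only the official SDK. The sketch below uses just the Python standard library. It assumes the server listens at `http://localhost:1234/v1` (the address used in the sample on this page) and serves the standard OpenAI `/chat/completions` route; the helper names `build_chat_request` and `send` are hypothetical and exist only for illustration, and `your-local-model` is a placeholder for whatever model you have installed.

```python
import json
import urllib.request

# Assumption: base URL and API key match the sample on this page.
BASE_URL = "http://localhost:1234/v1"
API_KEY = "mlx-gui"


def build_chat_request(prompt, model="your-local-model"):
    """Build (but do not send) a POST request in the OpenAI chat format."""
    payload = {
        "model": model,  # placeholder: use the name of a model you installed
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {API_KEY}",
        },
        method="POST",
    )


def send(request, timeout=60):
    """Send the request and return the assistant's reply text."""
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        body = json.load(resp)
    # OpenAI-style responses put the reply under choices[0].message.content.
    return body["choices"][0]["message"]["content"]
```

With the server running and a model loaded, `send(build_chat_request("Hello!"))` would return the reply as a string. Keeping request construction separate from sending makes it easy to inspect the payload before anything goes over the wire.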

Intuitive conversation view with syntax highlighting and markdown support

Browse and install thousands of models from HuggingFace with one click

Configure memory limits, API endpoints, and model parameters easily

Monitor performance, memory usage, and active models at a glance

See what developers are building with MLX-GUI

A powerful transcription app built on MLX-GUI that provides fast, accurate speech-to-text conversion using local AI models.

View on GitHub

    # Just change the base URL - that's it!
    from openai import OpenAI

    client = OpenAI(
        base_url="http://localhost:1234/v1",
        api_key="mlx-gui"
    )

    response = client.chat.completions.create(
        model="your-local-model",
        messages=[{"role": "user", "content": "Hello!"}]
    )

Join thousands of developers running AI locally with MLX-GUI.